Introduction

Over the past few years I have heard a lot of bad excuses when it comes to NuGet package management. In many of the .NET shops I have worked in, merely mentioning Nexus put a strange sense of fear in the air. Neither of the two is anything to be afraid of and, surprise surprise, they work exceptionally well together. In this post I would like to show you how to set up a NuGet proxy repository and a local, private NuGet repository on Nexus Repository Manager OSS. I will guide you through the installation of Nexus Repository Manager OSS and all of the setup tasks needed to create your own NuGet server that proxies calls to NuGet.org and stores your own packages. I will also mention some details about configuration and maintenance, and show you how to set up your tooling to work with it. It is going to be a pretty long post, so if you are interested, keep on reading. I hope I can convince you to give Nexus a chance and get the best out of NuGet.

Prerequisites

In order to install Nexus, you need to make sure that a couple of things are in place.

First, make sure a JRE is installed. In the command prompt, run java -version. If the command executes successfully, you are ready to go. If not, download the right version for your OS at http://www.java.com/en/download/manual.jsp. Either way, make sure that you are running at least JRE version 8u31, as strongly recommended by Sonatype.
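If you prefer to script this check, the following is a minimal Python sketch. It assumes the classic version string format that Java 8 prints (for example java version "1.8.0_31"); the function names are my own and any other format is simply reported as unconfirmed.

```python
import re
import subprocess

# Minimum JRE mentioned above: Java 8 update 31.
MIN_VERSION = (8, 31)


def parse_java_version(output):
    """Return (major, update) from `java -version` output, or None.

    Only the legacy 1.<major>.<minor>_<update> format is recognised.
    """
    match = re.search(r'version "1\.(\d+)\.\d+(?:_(\d+))?', output)
    if not match:
        return None
    return int(match.group(1)), int(match.group(2) or 0)


def is_supported(output):
    """True when the parsed version is at least MIN_VERSION."""
    parsed = parse_java_version(output)
    return parsed is not None and parsed >= MIN_VERSION


def check_installed_jre(java_exe="java"):
    """Run `java -version` and evaluate its output."""
    try:
        # `java -version` writes its report to stderr, not stdout.
        result = subprocess.run(
            [java_exe, "-version"], capture_output=True, text=True
        )
    except FileNotFoundError:
        return False  # java is not on the PATH
    return is_supported(result.stderr)
```

Calling check_installed_jre() returns True only when a JRE of at least 8u31 could be confirmed on the PATH.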

Operating system requirements are specified at the following link https://support.sonatype.com/hc/en-us/articles/213464208-Sonatype-Nexus-System-Requirements.

Download the latest version of Sonatype Nexus at http://www.sonatype.org/nexus/go/. At the time of writing, the current version is 2.12.0.

Installing Nexus

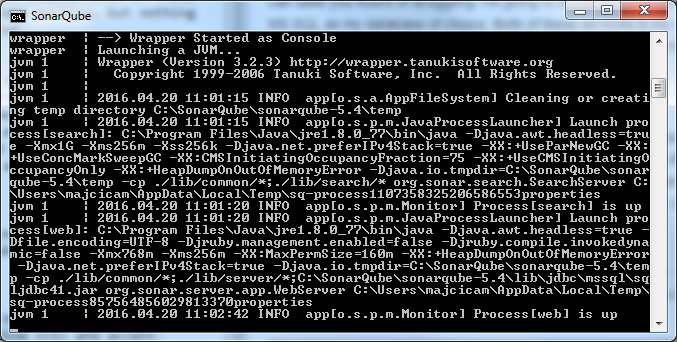

To install Nexus Repository Manager OSS, extract the content of the downloaded file into a directory. In my case this will be D:\Nexus. Once done, go to the folder called nexus-2.x.x-xx\bin and execute nexus install followed by nexus start. You will see something similar to this:

D:\Nexus\nexus-2.12.0-01\bin>nexus install

wrapper | nexus installed.

D:\Nexus\nexus-2.12.0-01\bin>nexus start

wrapper | Starting the nexus service...

wrapper | Waiting to start...

wrapper | Waiting to start...

wrapper | Waiting to start...

wrapper | Waiting to start...

wrapper | Waiting to start...

wrapper | nexus started.

D:\Nexus\nexus-2.12.0-01\bin>

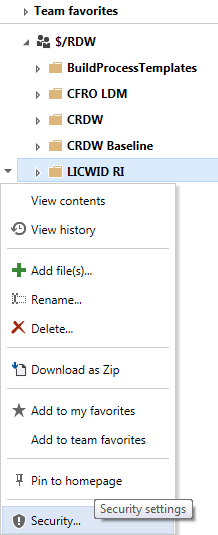

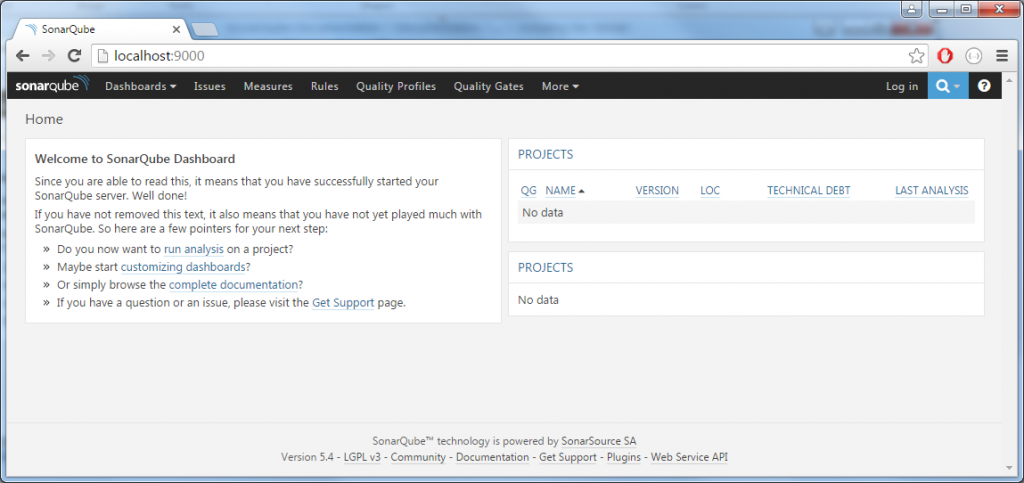

Now open the default address, http://localhost:8081/nexus, in your browser.

If all went well you should see the following:

![welcome_screen]()

Log in by following the link in the upper-right corner. The default administrative account is admin with the password admin123. Once logged in, you will see additional options in the menu on the left side. We are now ready to start configuring our repositories.

I also advise you to check the list of recommended post-installation configuration steps in the Post-Install Checklist.

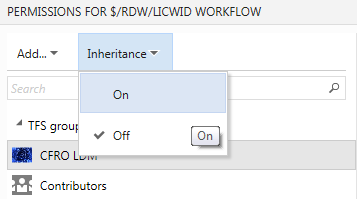

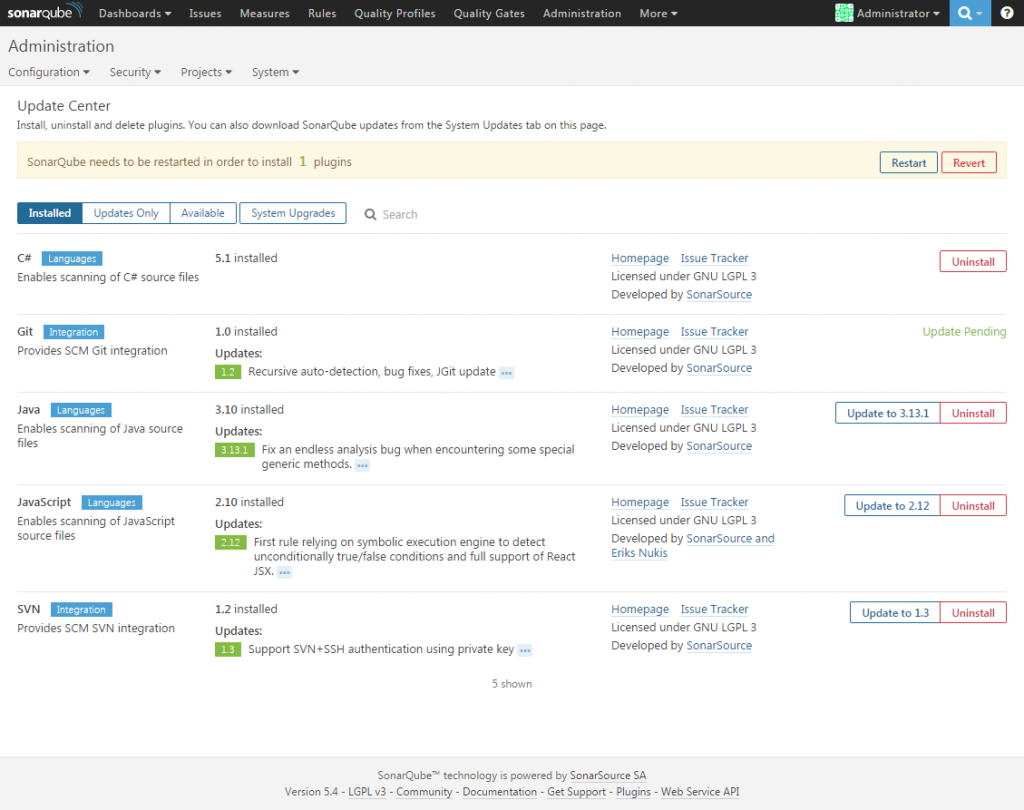

NuGet proxy repository

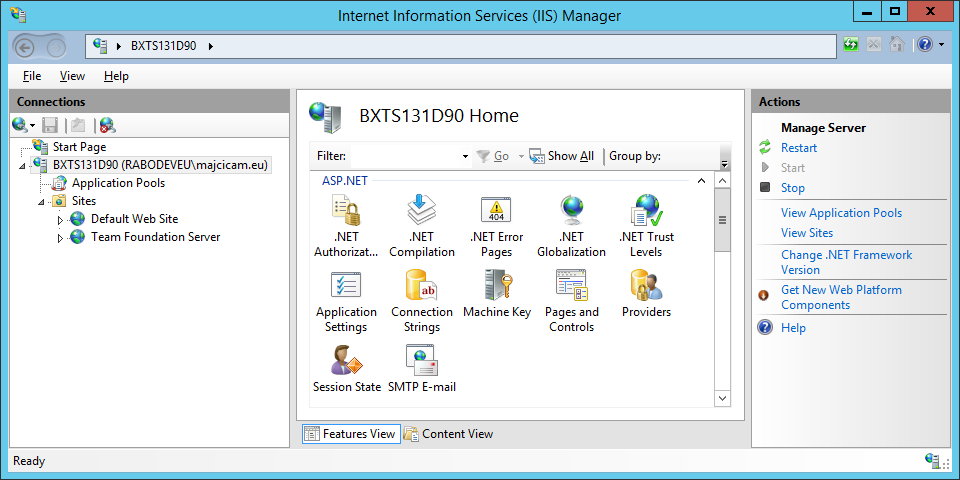

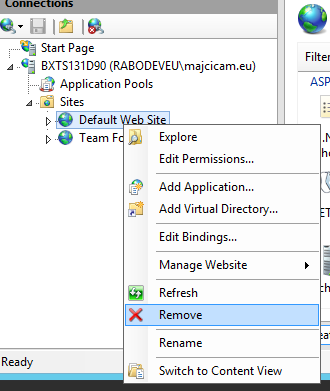

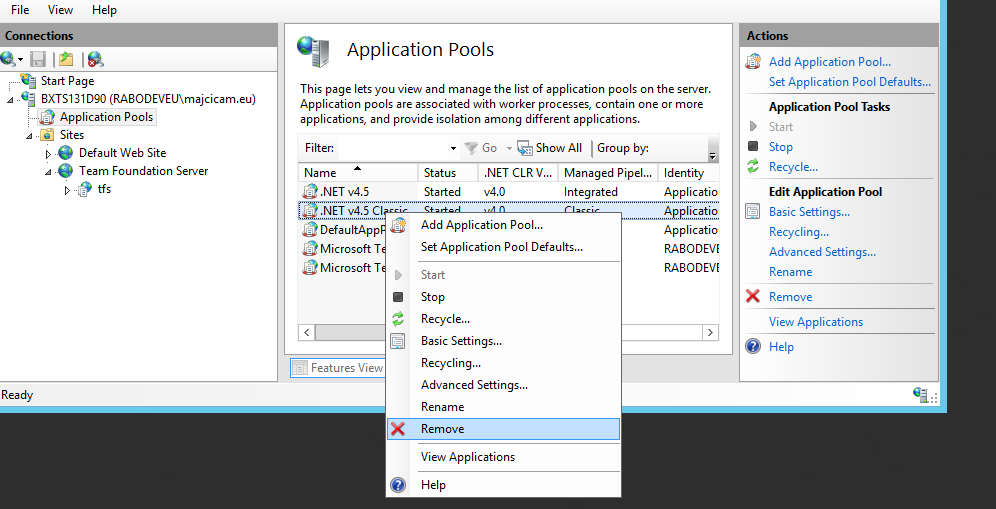

By default, Nexus Repository Manager OSS ships pre-configured with several repositories. If you intend to use it only as a NuGet repository/proxy, you can remove all of them.

![repositories]()

This happens to be my case, so I will remove all of the pre-configured repositories. Once you delete all of them, you should see a message saying, No repositories defined.

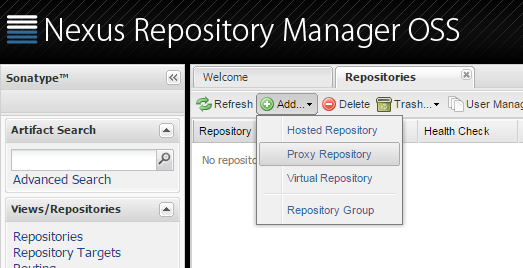

I will now add a repository that will proxy calls to the NuGet.org public repository. Click on Add and choose Proxy Repository.

![add_proxy_repo]()

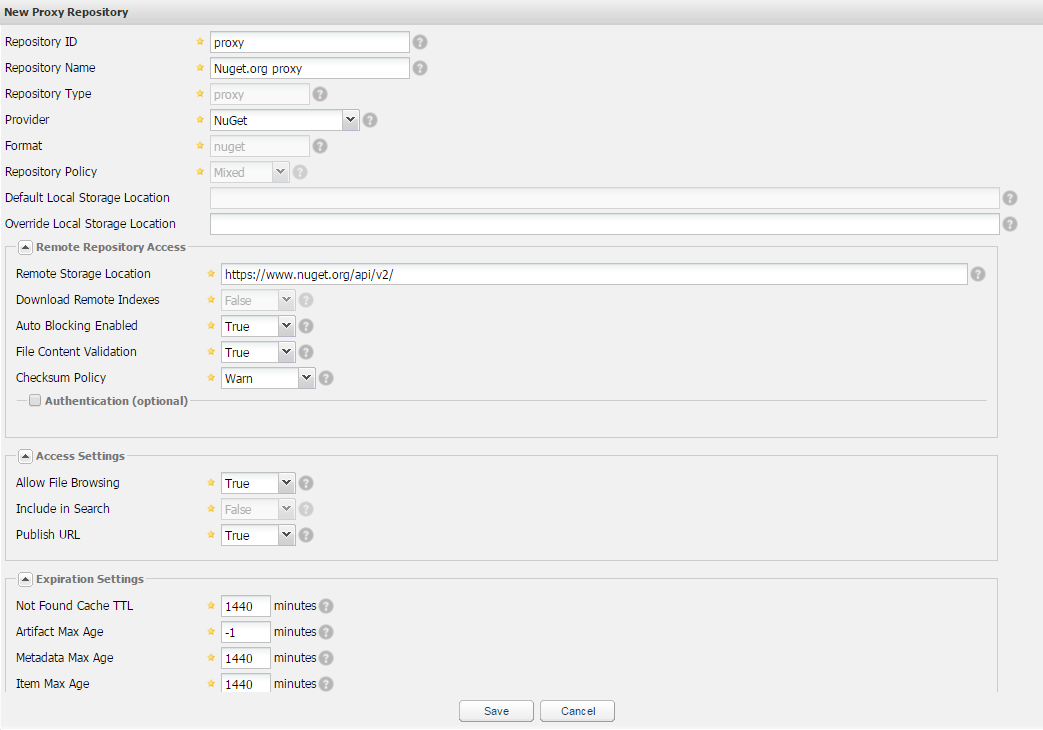

At the bottom of the screen you will be asked to fill in all the necessary details. Let’s analyse the important ones.

Repository ID: I am going to choose a very concise name here, as this will be part of our feed URL. I will set it to proxy.

Repository Name: This is just a name that will be assigned to this repository and it will only be visible from the Nexus repositories management interface. I will set it to Nuget.org proxy.

Provider: It needs to be set to NuGet.

Remote Storage Location: Here we will set the link to the NuGet.org feed. Currently it is https://www.nuget.org/api/v2/

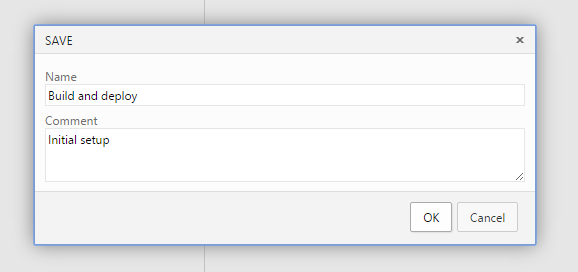

These are all of the necessary parameters. Once they are filled in, you can click on Save.

![new_proxy_repo]()

If you now select the just created repository and then open the NuGet tab, you will find the link to your feed. In my case it is http://localhost:8081/nexus/service/local/nuget/proxy/.

The first part of the link is misleading: you will not be able to use this feed from any machine other than the server itself. It is displayed as localhost because that is the URL we used to access the administration interface. For other machines it needs to be http://yournexusservername:8081/nexus/service/local/nuget/proxy/, where yournexusservername is the name of the machine. We will see later how to influence this URL.
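To make the structure of the feed address explicit, here is a small Python sketch that derives it from the base URL of the server and the Repository ID. The helper name is my own invention; the path layout is simply the one shown in the NuGet tab above.

```python
def nuget_feed_url(base_url, repository_id):
    """Build the NuGet feed URL of a Nexus repository.

    base_url is the address of the Nexus web application, for example
    http://yournexusservername:8081/nexus, and repository_id is the
    Repository ID chosen when the repository was created.
    """
    return f"{base_url.rstrip('/')}/service/local/nuget/{repository_id}/"


print(nuget_feed_url("http://yournexusservername:8081/nexus", "proxy"))
```

Only the host part changes between machines; the rest of the path is determined by the Repository ID.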

NuGet private repository

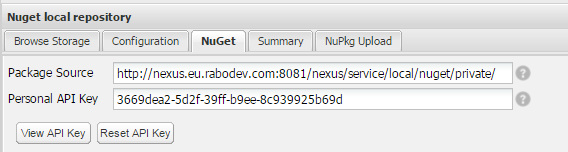

If you have your own private NuGet packages that you would like to publish and retrieve, Nexus Repository Manager OSS can help you with that too. You need to create a hosted repository. For this repository you need the same settings as for the proxy, except the Remote Storage Location. I’ve set Repository ID to ‘private’ and Repository Name to ‘Nuget local repository’.

Once created, under the NuGet tab, you will find the personal API key that you need to use via NuGet.exe in order to push a package to this repository.

![api_key]()

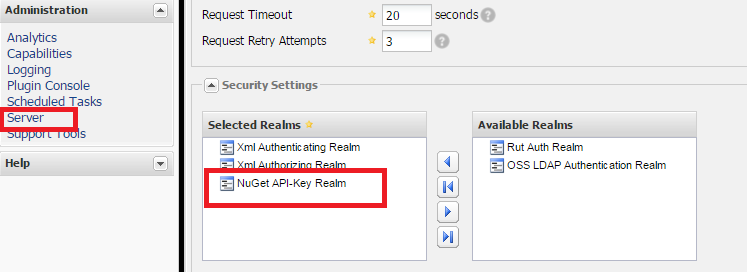

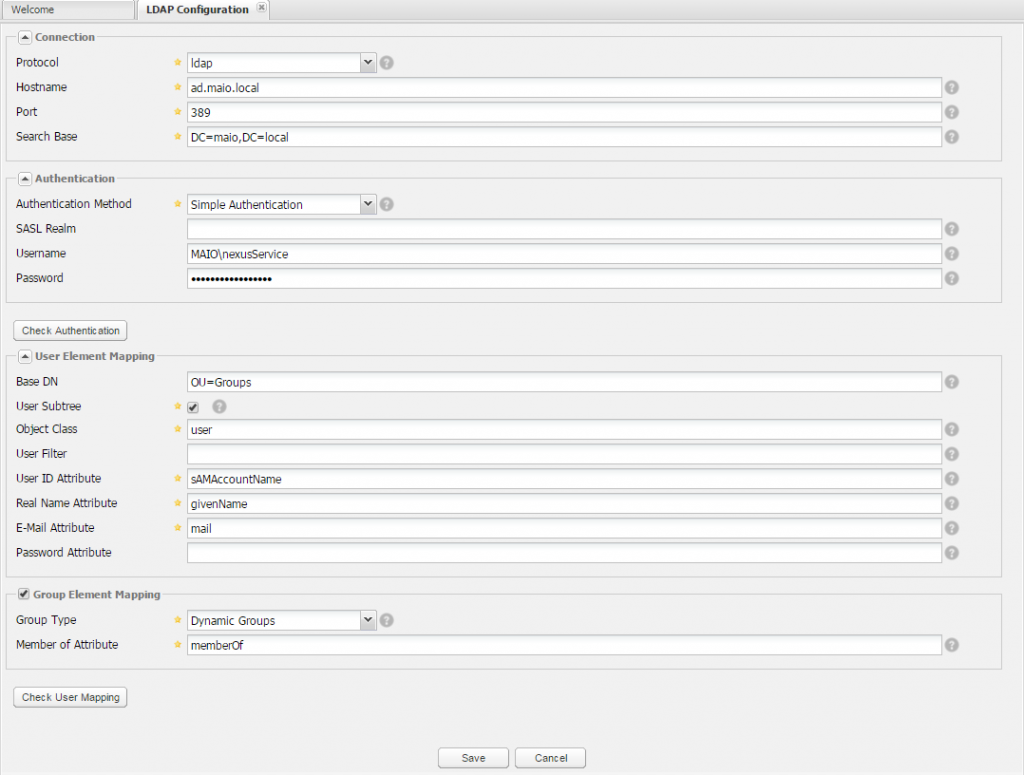

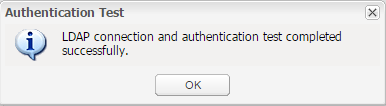

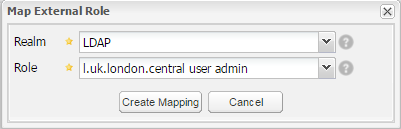

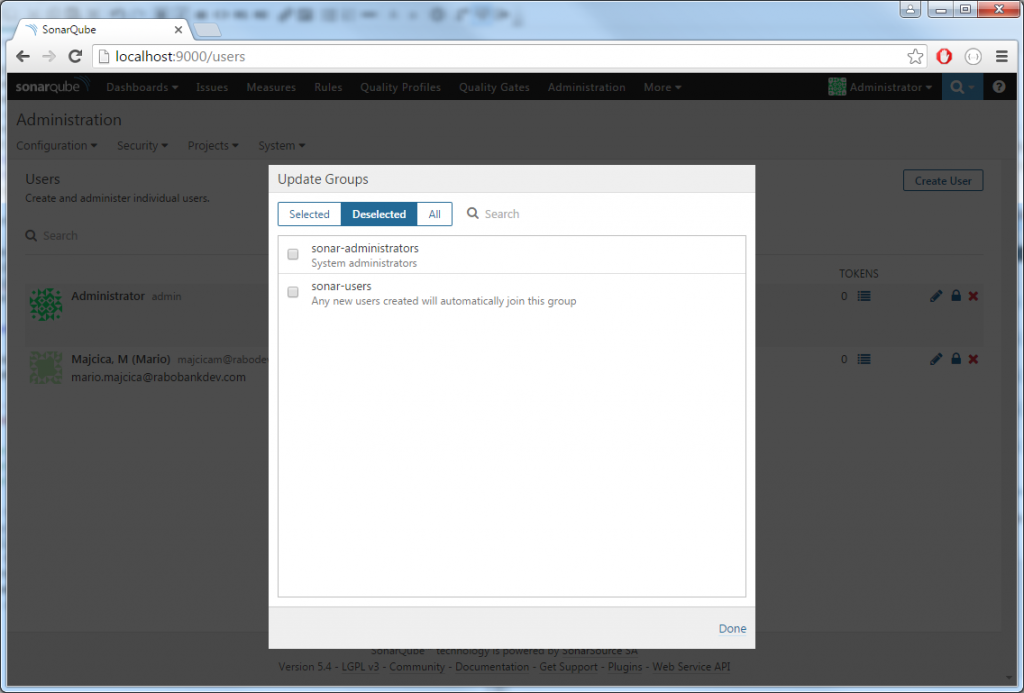

One last step is necessary before you are able to upload your packages. We need to enable authentication, and to do so you need to activate the NuGet API-Key Realm.

![security_settings_realms]()

Under the Server settings, make sure that NuGet API-Key Realm is listed under Selected Realms. By default it is NOT.

Now you can set your API key and push your package.

API keys are generated on a per-user basis. Each user has their own unique key, so if you intend to upload packages under a specific user profile, make sure you get the right key: log in as that user and check the property mentioned above.
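Setting the key and pushing can be scripted around NuGet.exe. The sketch below is one possible way to do it from Python, using the setApiKey and push commands of the NuGet command line with the -Source option. The package file name, the key and the function names are placeholders of mine, and the feed URL assumes the ‘private’ repository ID used above.

```python
import subprocess


def nuget_commands(package_path, feed_url, api_key, nuget_exe="nuget"):
    """Return the two NuGet CLI invocations as argument lists.

    The first stores the API key for the feed, the second pushes the
    package to that same feed.
    """
    return [
        [nuget_exe, "setApiKey", api_key, "-Source", feed_url],
        [nuget_exe, "push", package_path, "-Source", feed_url],
    ]


def push_package(package_path, feed_url, api_key, nuget_exe="nuget"):
    """Run both commands, stopping at the first failure."""
    for command in nuget_commands(package_path, feed_url, api_key, nuget_exe):
        subprocess.run(command, check=True)


# Hypothetical usage; replace every value with your own:
# push_package(
#     "MyPackage.1.0.0.nupkg",
#     "http://yournexusservername:8081/nexus/service/local/nuget/private/",
#     "your-api-key",
# )
```

Keeping the command construction separate from the execution makes it easy to print the commands first and inspect them before anything is pushed.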

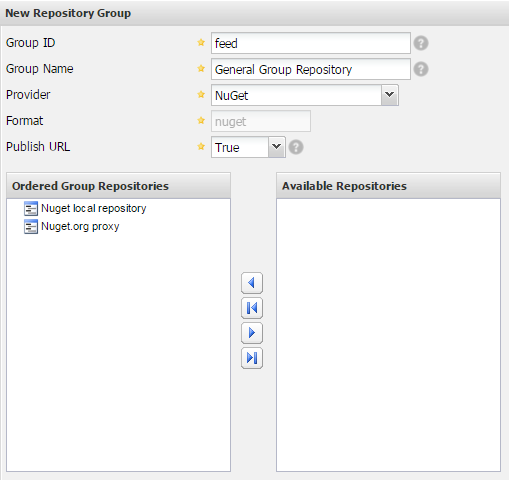

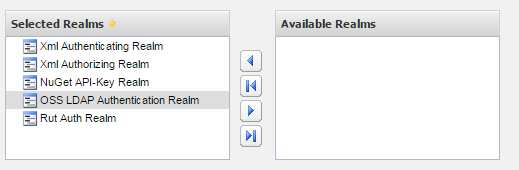

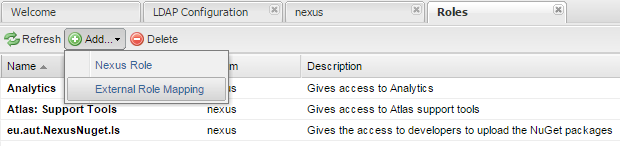

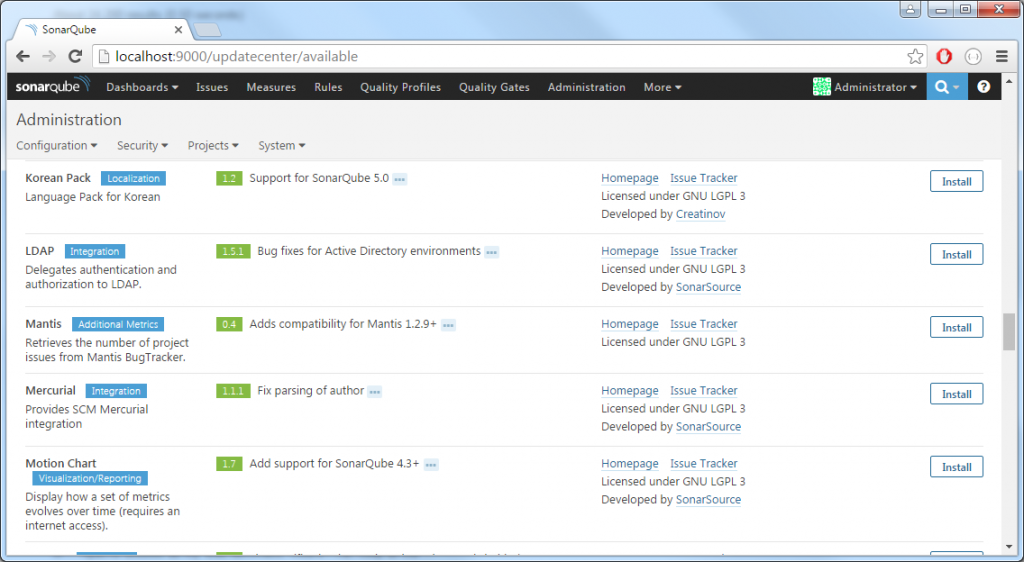

Living under the same roof

Having multiple repositories and feeds can be awkward to set up and maintain. Luckily, Nexus Repository Manager OSS offers a solution that consolidates multiple repositories under a single feed. A repository group hides multiple repositories behind a single address/feed and allows us to modify the underlying repositories, add new ones or make other changes, without changing the configuration in any of our projects or tooling. It also gives a lot of flexibility in setting up different policies and strategies for managing your repositories.

To create a repository group, choose Add and then the Repository Group option in the repository management view.

![new_repository_group]()

The same settings are mandatory as for the private repository, with one addition: you need to choose which repositories will be part of this group.

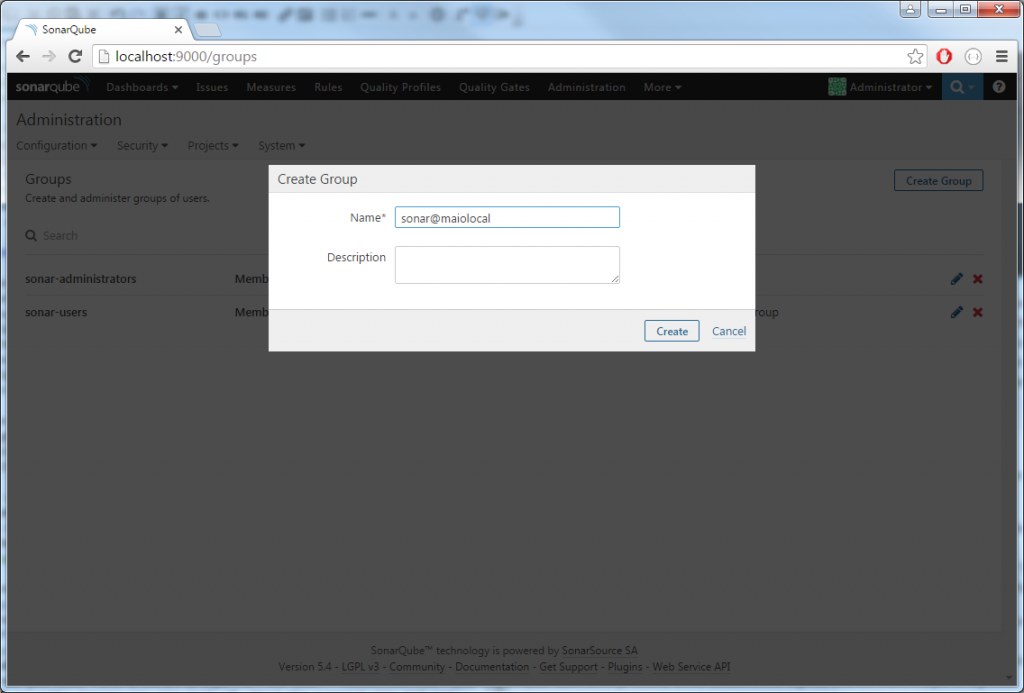

Tidying up

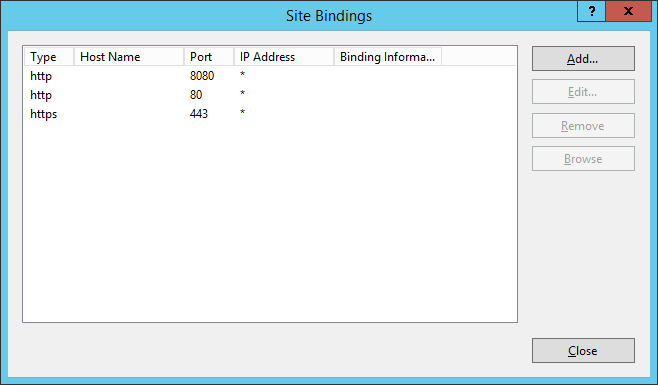

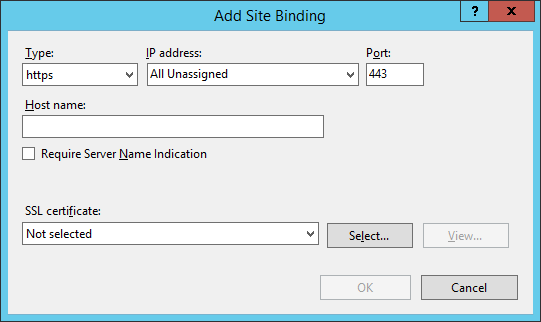

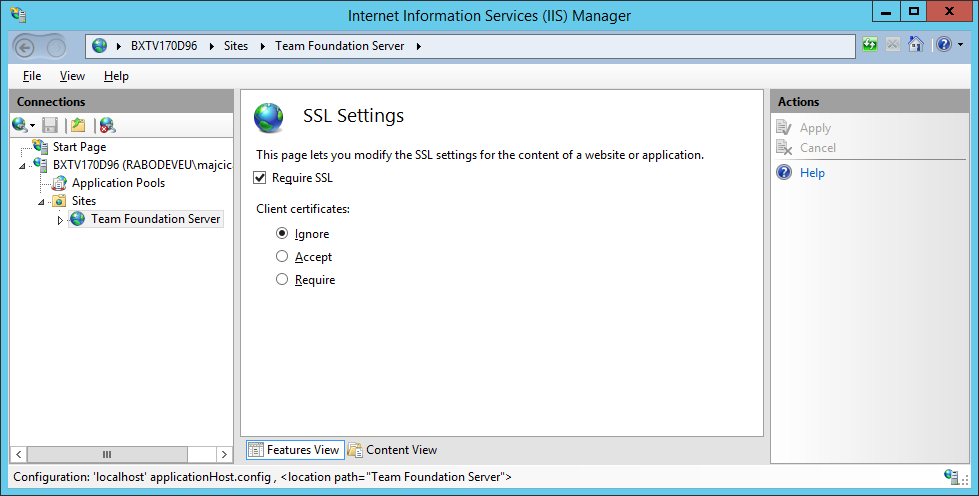

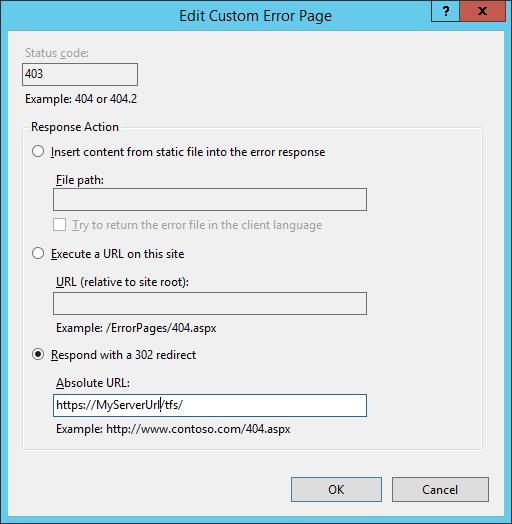

The link to our feed as it stands is quite verbose and hard to remember: http://yournexusservername:8081/nexus/service/local/nuget/proxy/. Instead of pointing to yournexusservername, we may consider adding a CNAME record to our DNS and pointing it to that machine. This also eases maintenance later on if we decide to move the service to another machine. In that case it is sufficient to re-point the DNS record to the new machine, and no changes to the feed URL are necessary.

Another thing that stands out is that the Nexus Repository Manager OSS web server, and therefore its feeds, run on port 8081. If it is an option for you, it is easier to run it on port 80 (or 443 if you plan to use SSL). You may also notice the ‘nexus’ part of the URL (the context root) just after the port number. It may not be necessary either, and removing it shortens all of the URLs.

I registered a CNAME in my domain and called it nexus. Now I can reach my server at http://nexus.maio.local:8081/nexus. To get the server running on port 80 and remove the nexus context root, we need to go to the conf folder (in my case D:\Nexus\nexus-2.12.0-01\conf) and edit the file called nexus.properties. We are interested in changing two properties under the # Jetty section. The first one is application-port, which by default is set to 8081; set it to 80. The second is nexus-webapp-context-path, which we need to change from /nexus to just /. This sets the context root with no prefix and makes our URLs shorter.

In the end, your configuration file should look like the following:

...

# Jetty section

application-port=80

application-host=0.0.0.0

nexus-webapp=${bundleBasedir}/nexus

nexus-webapp-context-path=/

...
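If you need to repeat this change on several machines, the edit can be scripted. The following Python sketch rewrites the two properties in a text with the same layout as nexus.properties. The helper name and the sample text are my own, with the defaults taken from the description above. Keep a backup of the real file before overwriting it.

```python
def set_properties(text, overrides):
    """Return the properties text with the given keys replaced.

    Comments and unrelated lines are left untouched; keys that are
    missing from the text are appended at the end.
    """
    remaining = dict(overrides)
    lines = []
    for line in text.splitlines():
        key = line.split("=", 1)[0].strip()
        is_property = "=" in line and not line.lstrip().startswith("#")
        if is_property and key in remaining:
            line = f"{key}={remaining.pop(key)}"
        lines.append(line)
    lines.extend(f"{key}={value}" for key, value in remaining.items())
    return "\n".join(lines) + "\n"


# Sample with the default values described in the text.
sample = """# Jetty section
application-port=8081
application-host=0.0.0.0
nexus-webapp=${bundleBasedir}/nexus
nexus-webapp-context-path=/nexus
"""

updated = set_properties(
    sample,
    {"application-port": "80", "nexus-webapp-context-path": "/"},
)
print(updated)
```

To apply it for real, read nexus.properties from the conf folder, pass its content through set_properties and write the result back.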

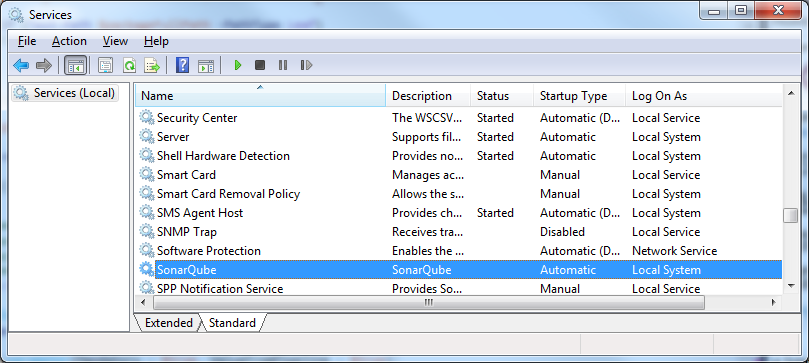

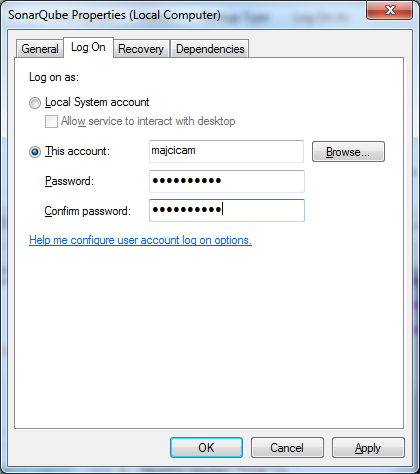

Now it is sufficient to restart the nexus Windows service for the changes to take effect. Provided no other application is using port 80, the restart should succeed and we can reach the administrative interface at http://nexus.maio.local/.

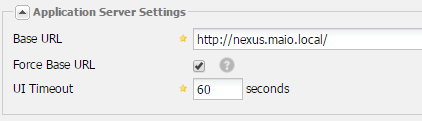

Also my feed now changes to http://nexus.maio.local/service/local/nuget/proxy/ which is shorter and easier to remember.

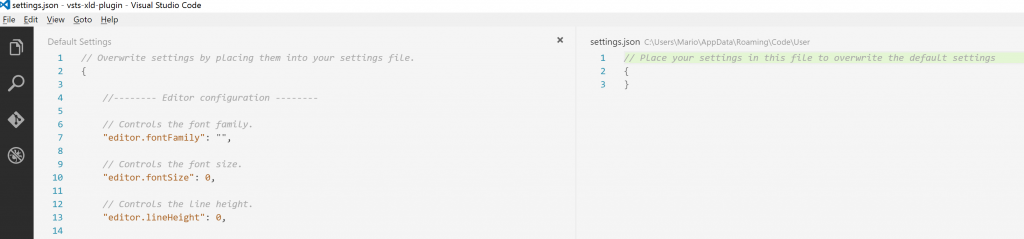

To make sure that the URLs are not derived from the incoming request, you need to set the base URL in the server settings.

![application_server_settings]()

Set the base URL according to your CNAME and remove nexus from the context root. You will also want to check the Force Base URL option: the configured base URL is then used for all links, and Nexus Repository Manager will not derive any of them from the incoming request URL.

Other considerations

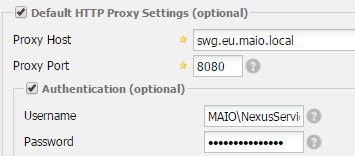

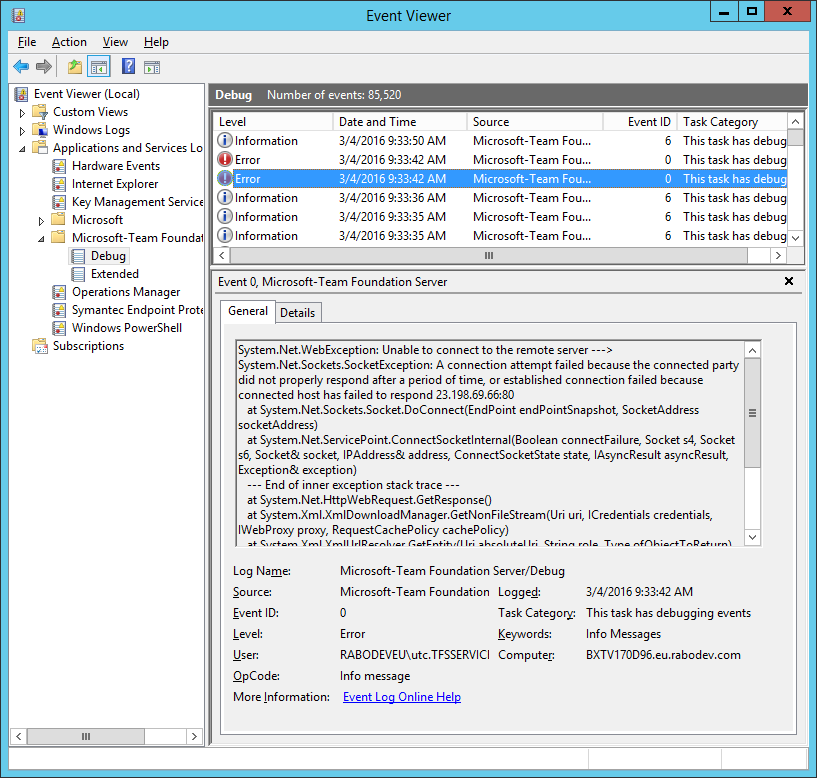

Proxy

In case your Nexus Repository Manager is behind a proxy you will need to set the relevant settings inside the Server panel. Make sure that you set both HTTP and HTTPS settings as NuGet.org feed uses HTTPS.

![http_proxy_settings]()

It is sufficient to indicate the proxy host, port and the Authentication credentials (Username and password).

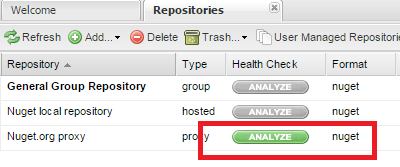

License and Vulnerability Tracking

This is a very neat feature offered by Nexus Repository Manager OSS. For our proxy repository we can activate Repository Health Check. When it is active, Nexus Repository Manager OSS returns actionable quality, security, and licensing information about the open source components in the repository. This gives us an overview of these metrics for all of the packages served by our proxy feed. To enable it, click on the Analyze button and after some time you will see the results.

![activate_rhc]()

On the Sonatype blog you can find a video interview with Marcel de Vries that covers this topic in detail.

Maintenance

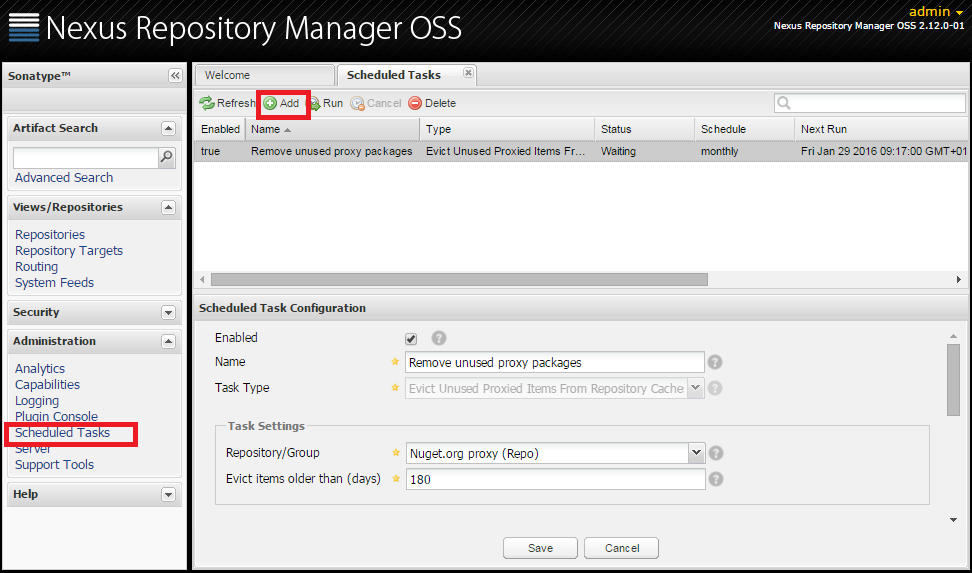

In case you are planning to use your proxy intensively you may be concerned about the disk space it may require to support all of the packages that are cached by the proxy. In that case you could opt for an out of the box task. If you open the Scheduled Tasks panel and Add a new scheduled task, there is a predefined task that will do just that.

![scheduled_tasks]()

Create a Scheduled task of type ‘Evict Unused Proxied Items From Repository Caches’. On a given schedule it will remove all of the items for the chosen repository that are older than a number of days you set.

Support and documentation

Nexus is a well-documented project. You can find more information about all of the topics covered in this blog post, and much more, at http://books.sonatype.com/nexus-book/reference/index.html. If you encounter a problem and cannot find an answer, support is available even for the free OSS version at https://support.sonatype.com/hc/en-us/categories/201980798.

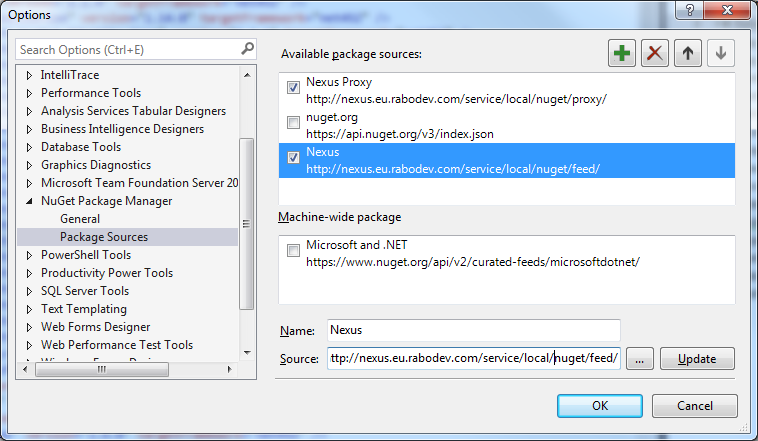

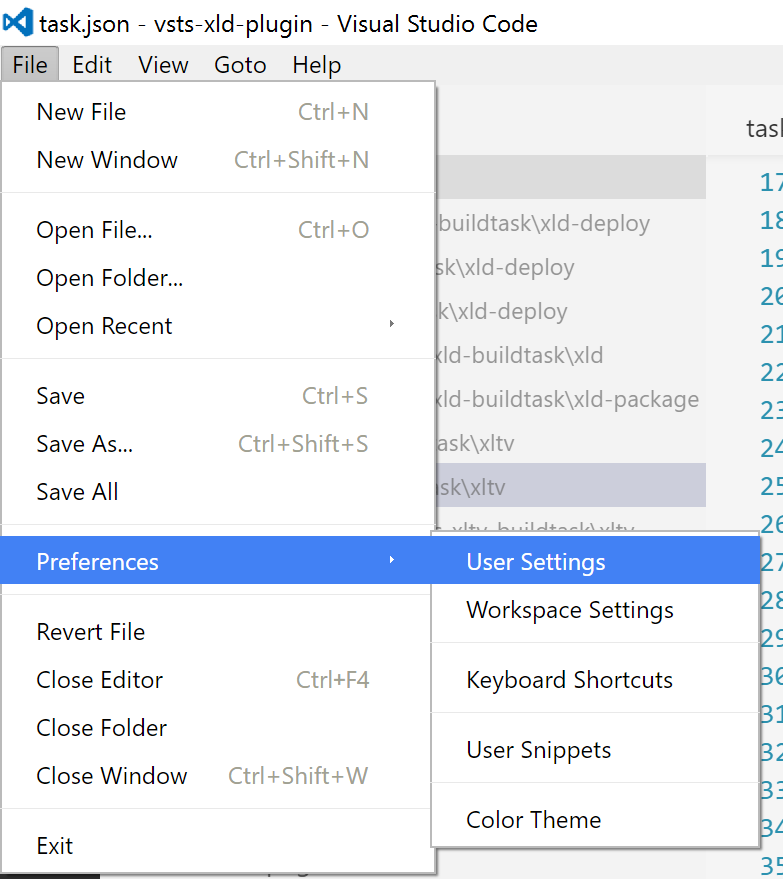

Visual Studio feed

Adding a new feed in Visual Studio is a routine task. Select the menu Tools > NuGet Package Manager > Package Manager Settings. Then, in the Options window, select Package Sources and add a new one by clicking on the plus button. You can name the new feed Nexus and set the source to http://nexus.maio.local/service/local/nuget/feed/. Press Update, then OK.

![package_sources_options]()

This will allow you to access NuGet.org through Nexus Repository Manager OSS in Visual Studio.

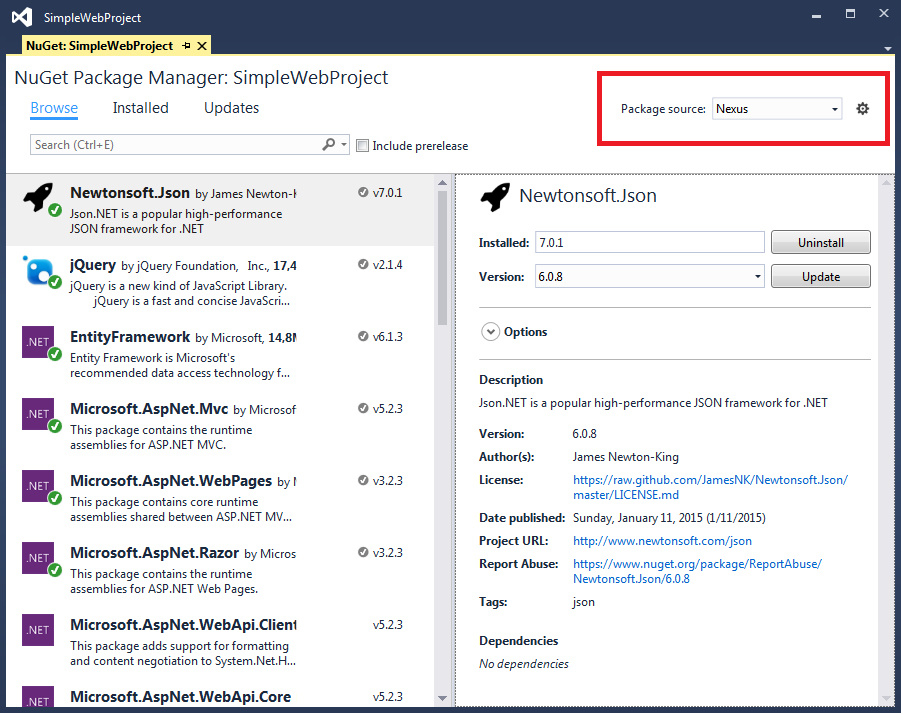

Now in the NuGet Package Manager select the newly added Package Source and you are ready to browse and install the available packages.

![nuget_package_manager]()

If you added a group feed, you will be able to retrieve both NuGet.org and your private packages through the same feed.

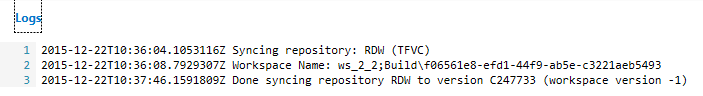

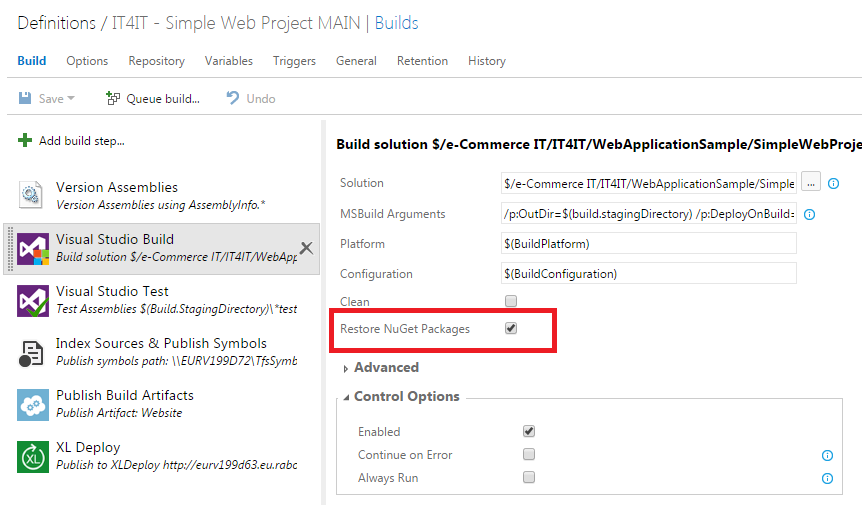

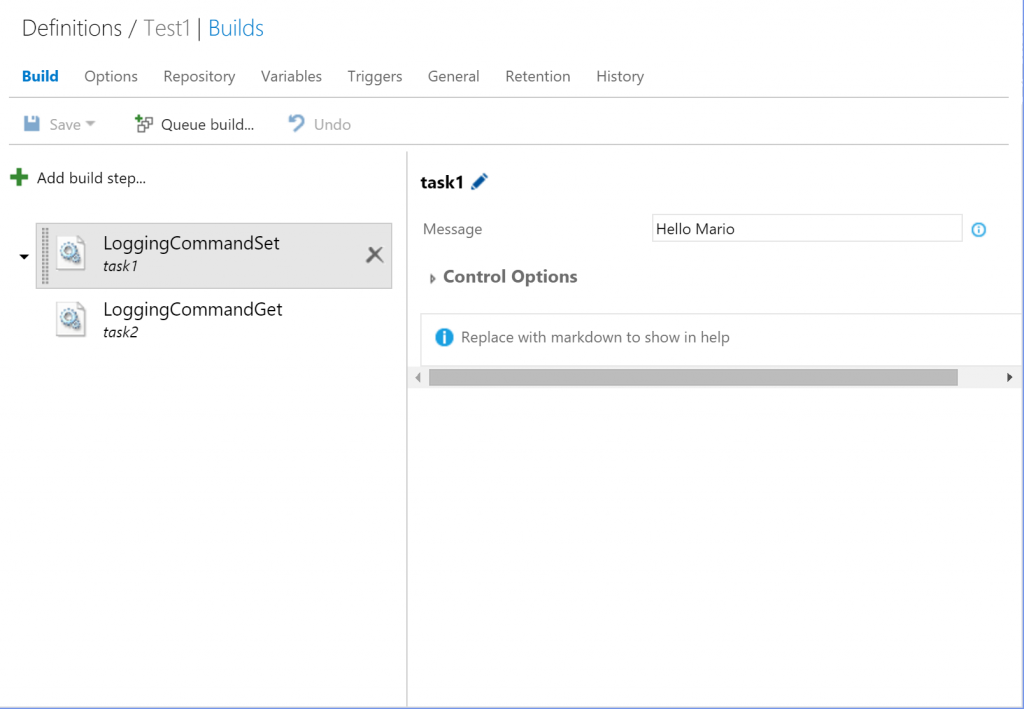

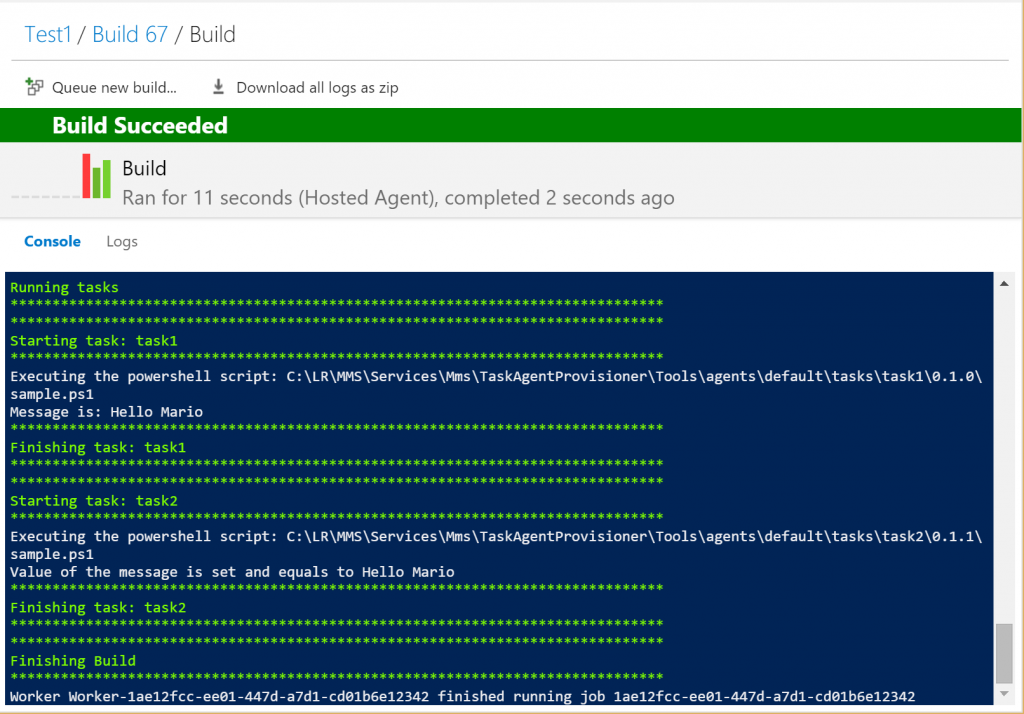

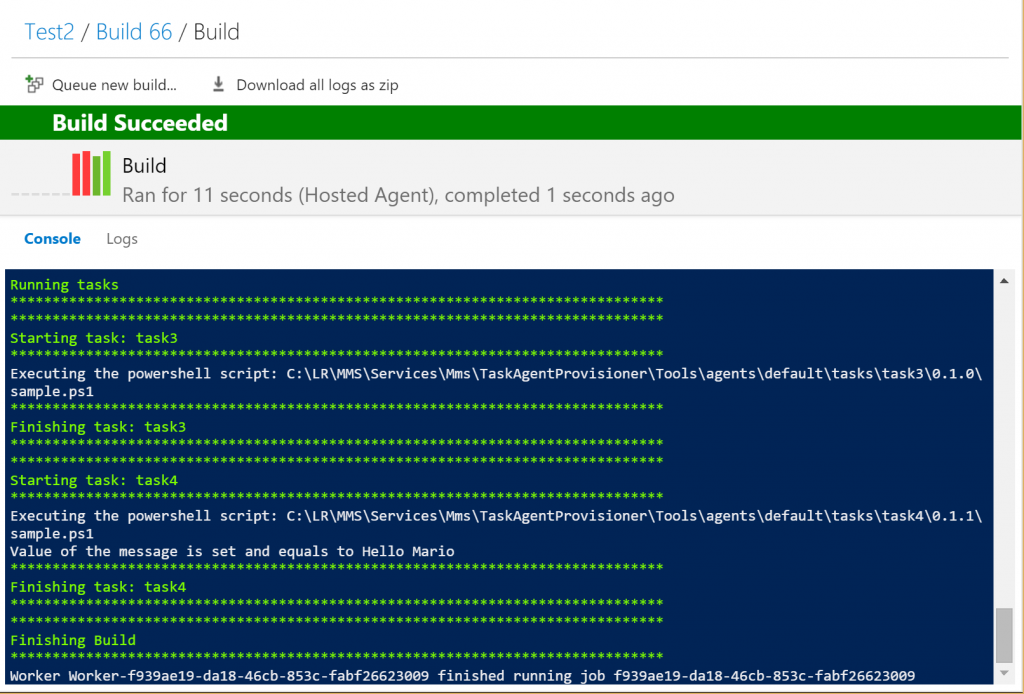

Build agent package restore

Before we enable NuGet package restore in our build task, we need to make sure that the necessary settings are in our nuget.config file. If you do not have a nuget.config file, you can place one in any of the paths listed in the NuGet documentation.

In the package sources you need to add the new feed. Consider the following example.

<?xml version="1.0" encoding="utf-8"?>

<configuration>

<packageSources>

<add key="Nexus" value="http://nexus.maio.local/service/local/nuget/feed/" />

</packageSources>

</configuration>
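If you maintain many projects, you may want to generate this file rather than copy it around. Here is a minimal Python sketch using only the standard library; the function name is mine, and the feed name and URL are the ones from the example above.

```python
import xml.etree.ElementTree as ET


def build_nuget_config(sources):
    """Return nuget.config content with one <add> entry per package source.

    sources maps a feed name (the key attribute) to its URL (the value
    attribute).
    """
    configuration = ET.Element("configuration")
    package_sources = ET.SubElement(configuration, "packageSources")
    for key, value in sources.items():
        ET.SubElement(package_sources, "add", key=key, value=value)
    body = ET.tostring(configuration, encoding="unicode")
    return '<?xml version="1.0" encoding="utf-8"?>\n' + body


config_xml = build_nuget_config(
    {"Nexus": "http://nexus.maio.local/service/local/nuget/feed/"}
)
print(config_xml)
```

The output is written on a single line, which is equivalent XML; save it as nuget.config with UTF-8 encoding.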

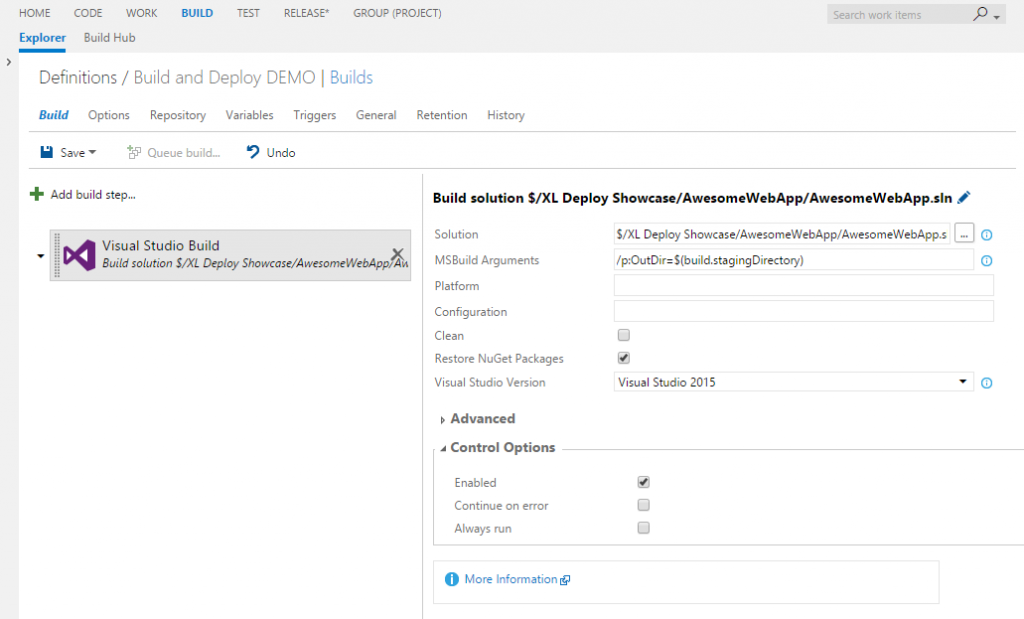

Now you need to check this file in and enable package restore on your build task.

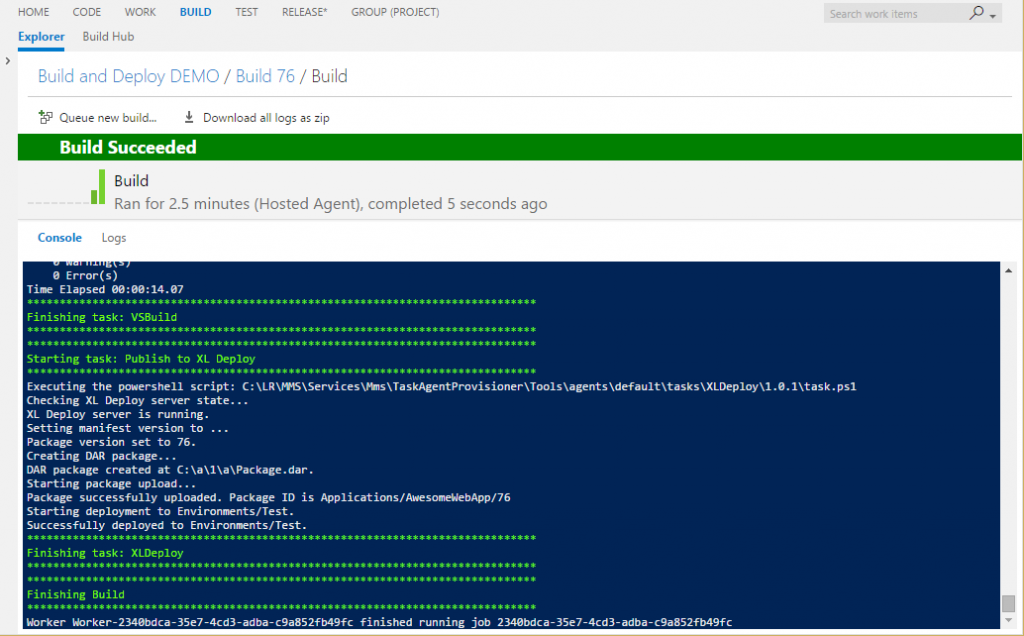

![build_task]()

This should now be sufficient for the build agent to retrieve all of the necessary packages via Nexus Repository Manager OSS.

You can now remove the packages folder from source control and request a clean build. In the build console you will then find references to packages being restored.

Manually uploading a package

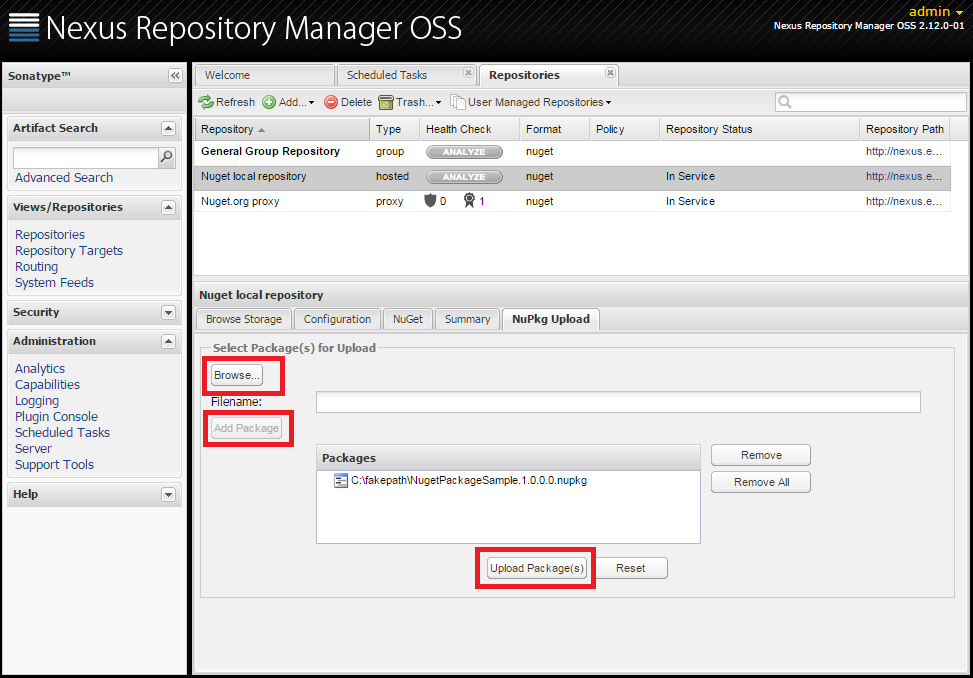

Our custom NuGet packages can be uploaded via the Nexus Repository Manager OSS web interface. Select your repository and go to the NuPkg Upload tab.

![nupkg_upload]()

In that tab, select Browse and choose your .nupkg file. Then click on Add Package and, if that is the only one you would like to upload, choose the Upload Package(s) button. If all goes well, you will see the upload confirmation message. If you go to the Browse Storage tab and refresh it, you should see your uploaded package.

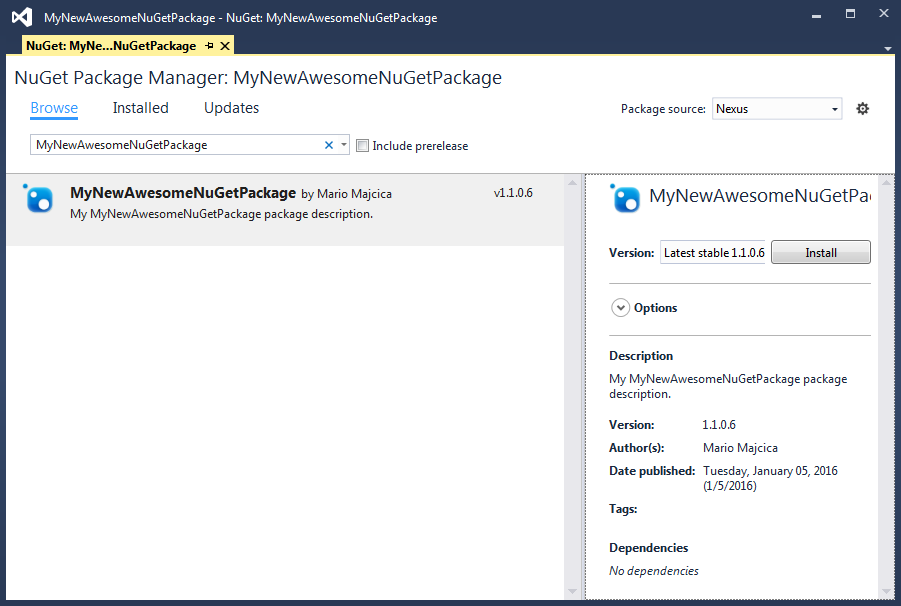

To be sure that we can retrieve our custom package (also via the group repository), let’s go to Visual Studio and search for it.

![search_hosted_package]()

As you can see, we are able to find our package both via our hosted repository feed and via the group repository. This shows the full potential of group repositories and the way they ease maintenance.

I ran into a situation in which I was not able to find the package I had uploaded via the group repository. If you upload a package that is already present in your proxy repository (that is, it matches the name and version of a package on NuGet.org), your package will not be shown. This is an edge case, however, and you should not run into it in real life. Still, I think it is worth mentioning, as it can happen during a trial and I do not want you to freak out about it.

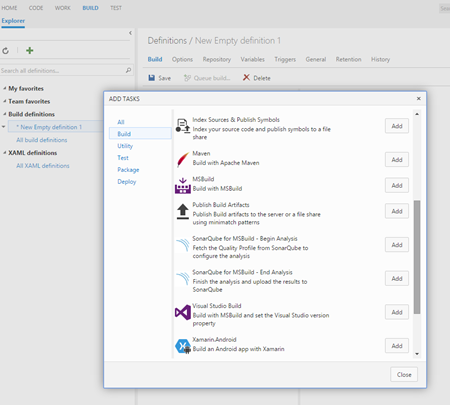

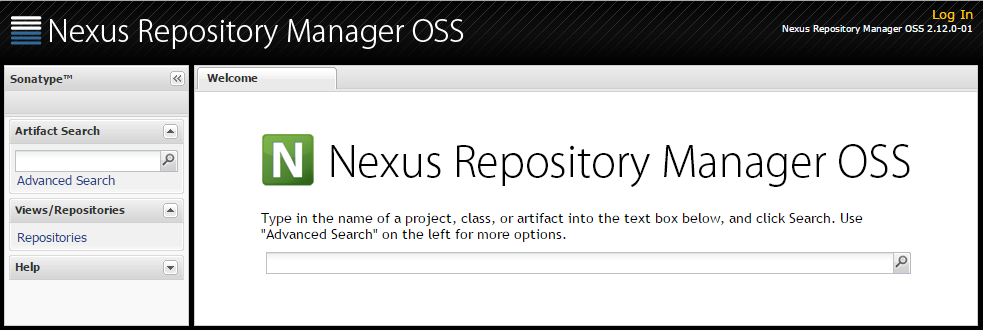

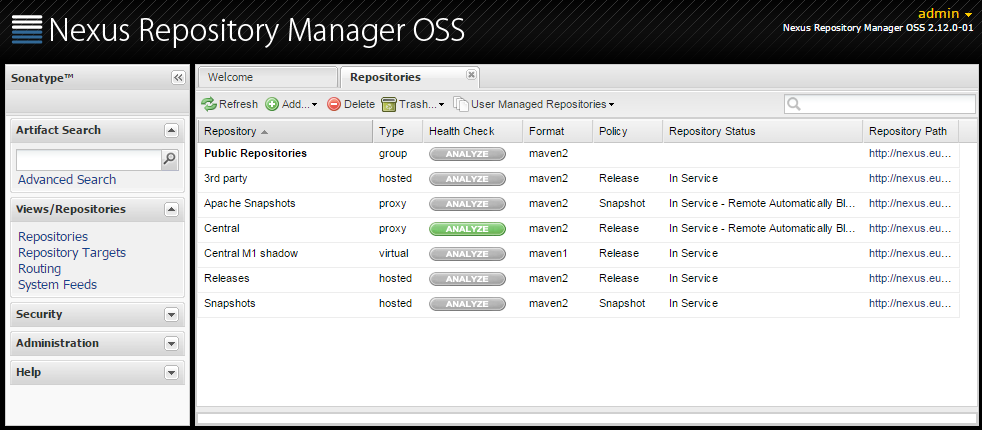

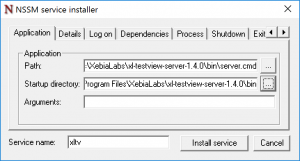

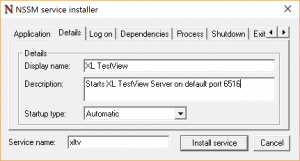

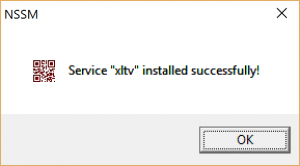

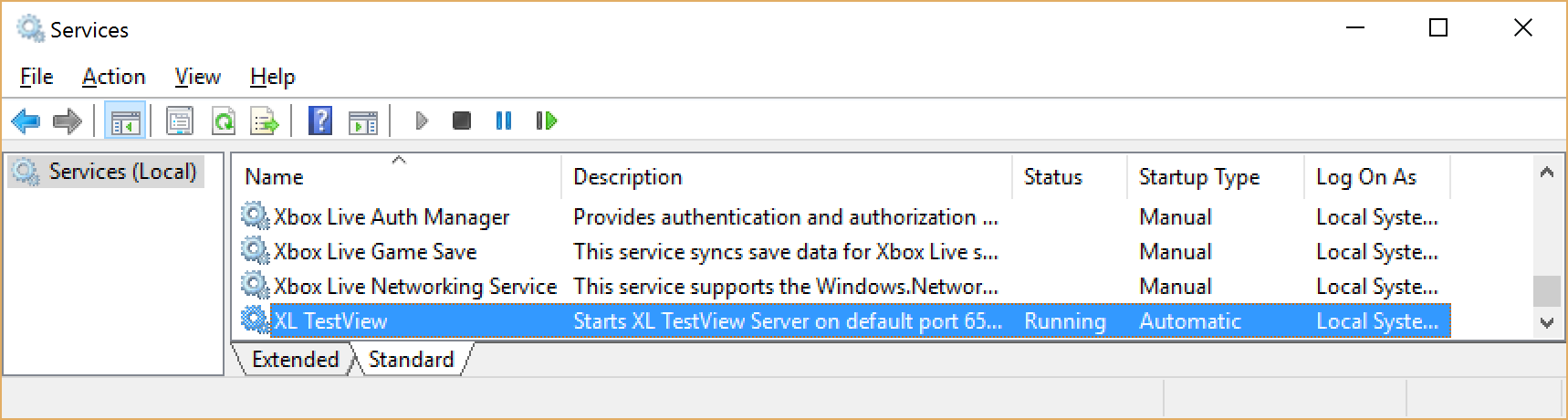

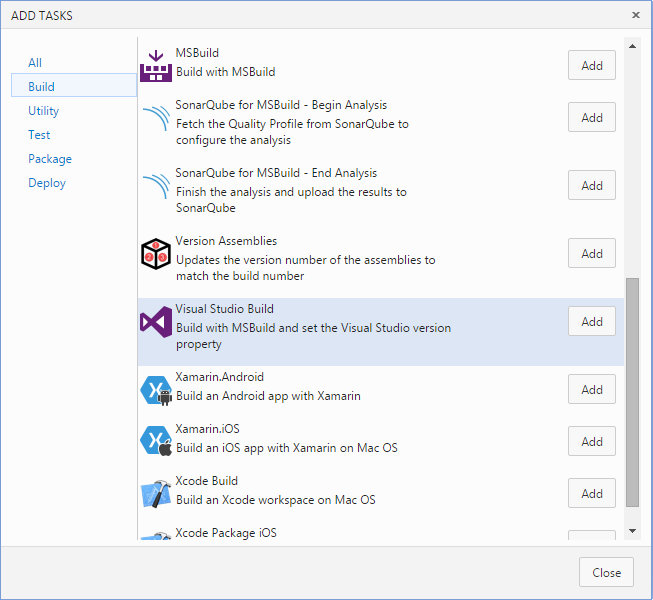

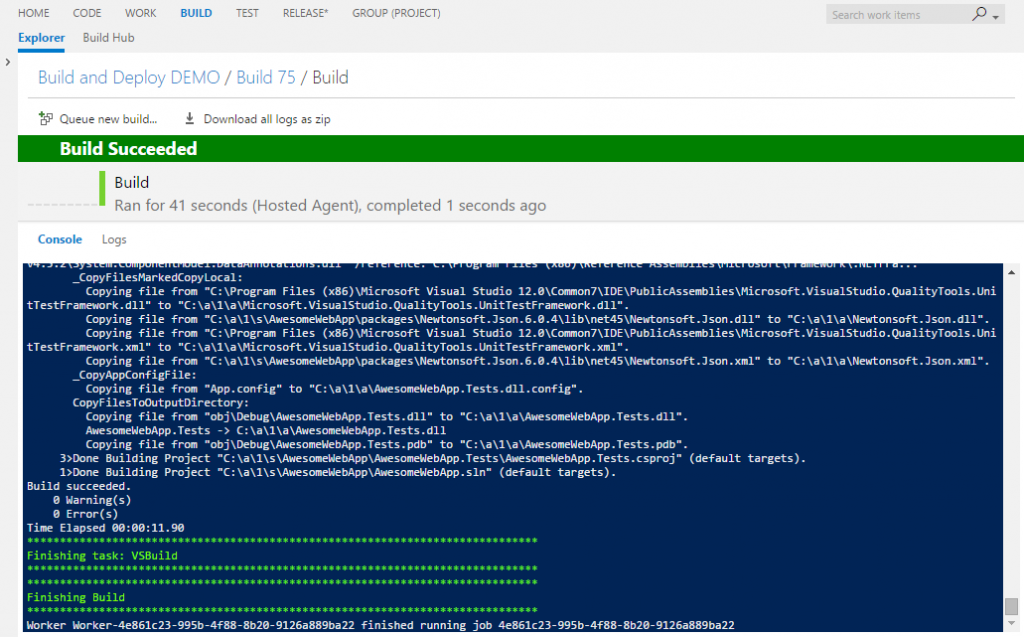

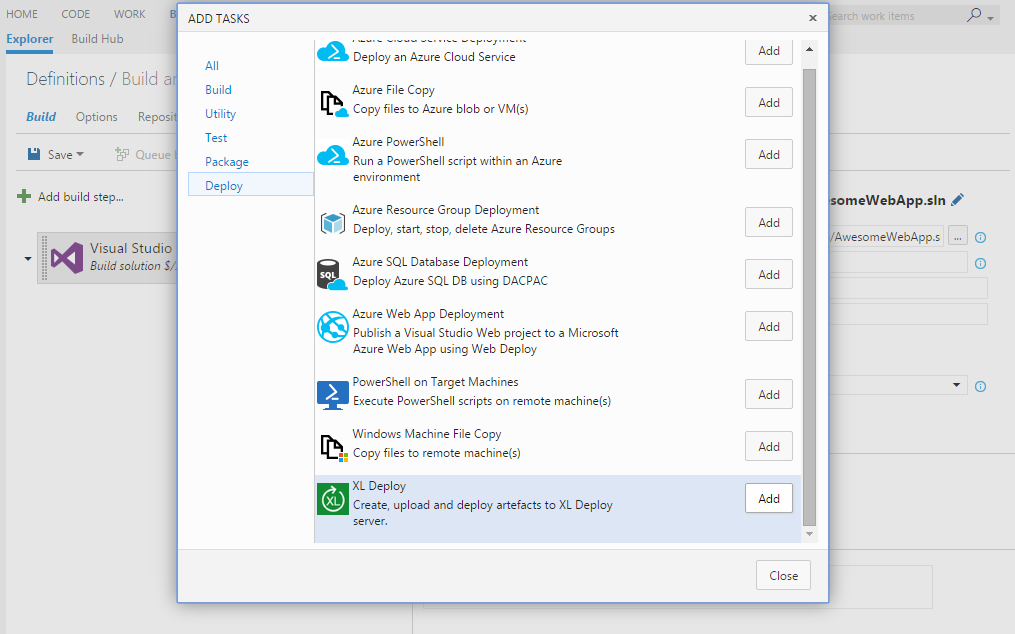

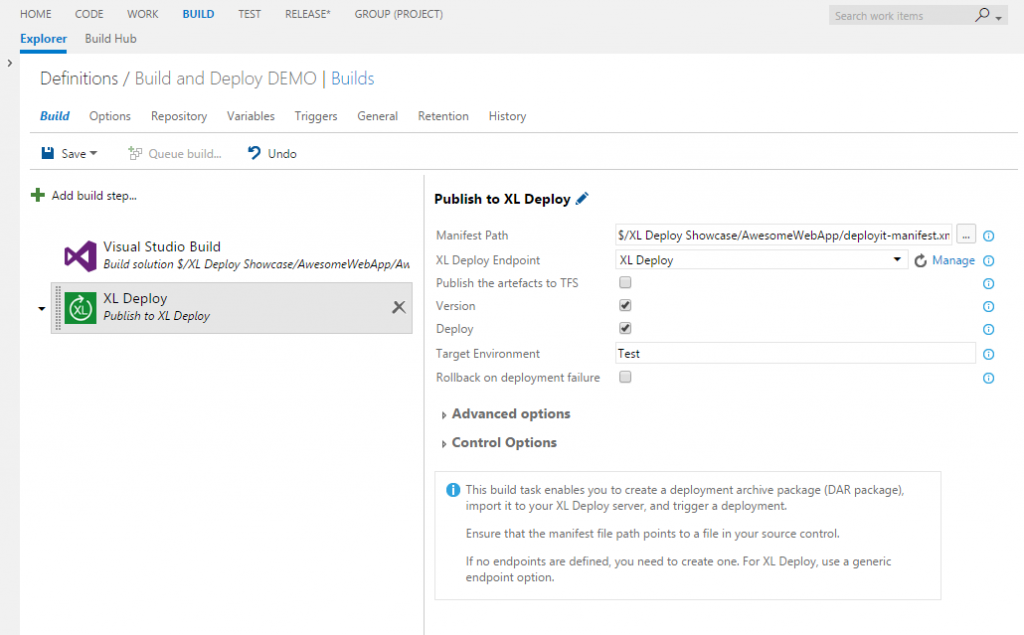

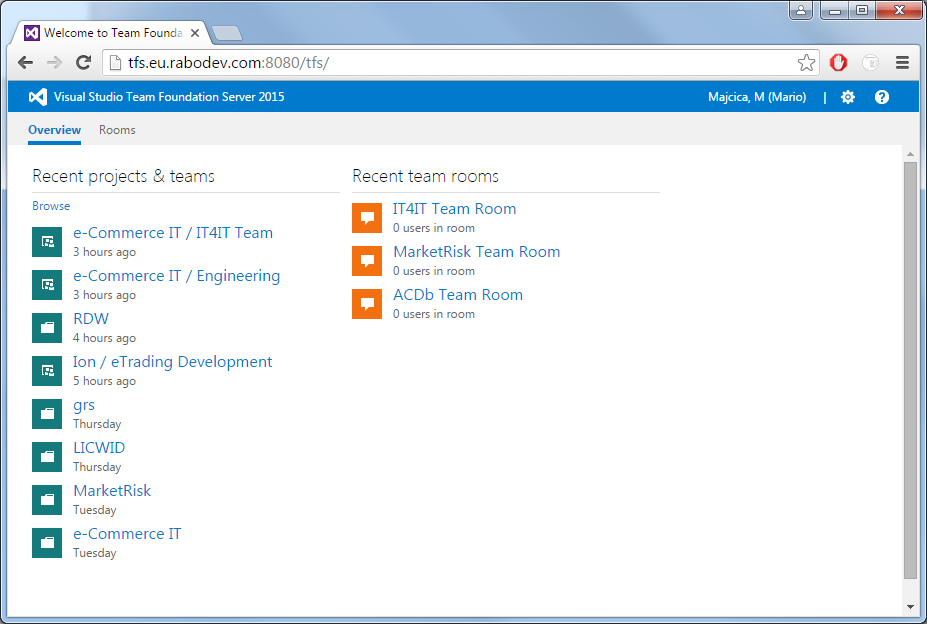

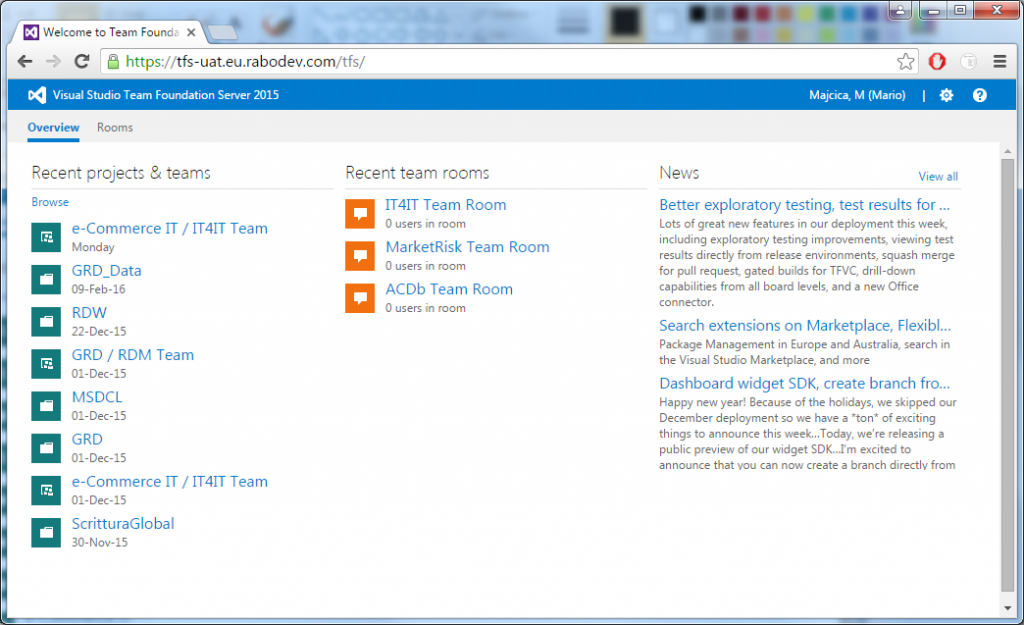

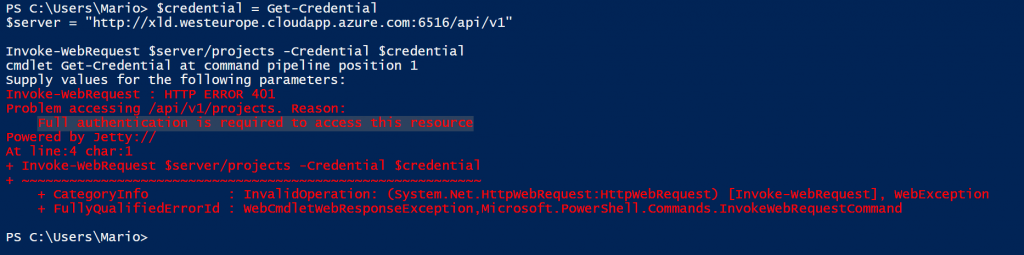

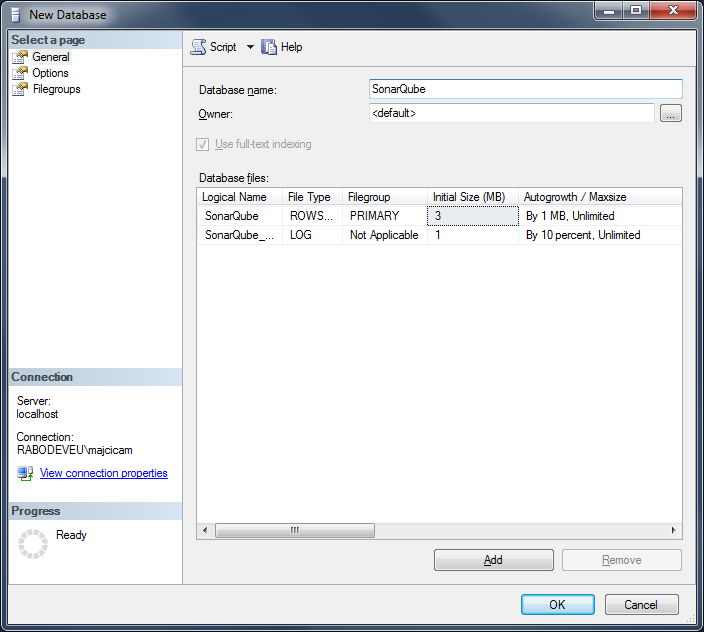

Uploading the package via TFS 2015 build

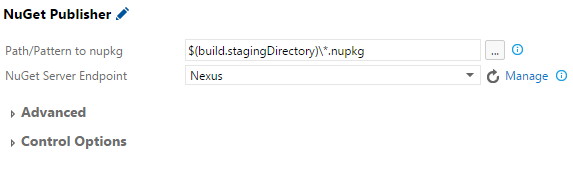

With TFS 2015 Update 1, a new build task called NuGet Publisher was made available. It can help you upload your package to any NuGet repository. After adding this build task to your build definition, you will be presented with the following options:

![nuget_publisher]()

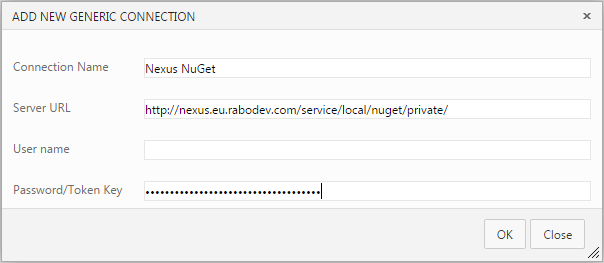

It is sufficient to define a new NuGet Server Endpoint only once. If you choose Manage and then add a new generic endpoint, you need to specify your feed as the Server URL, assign an arbitrary name to the endpoint and set your API key as the Password/Token Key value.

In our example name is Nexus NuGet, Server URL is http://nexus.maio.local/service/local/nuget/private/ and Password/Token Key is 626d4343-965f-30bc-858e-a322672acf54.

![nexus_endpoint]()

Now that the endpoint is set, we need to specify a minimatch pattern that points to our NuGet package. In the picture above you can see that I am searching for all the packages in the staging folder, where my package is created. This will depend largely on your build definition and what you are trying to achieve.

This is sufficient to automatically upload a new version of your package from your build to our local NuGet server.

In case you are interested in building the package based on your sources and your nuspec file directly by your build definition, you can use NuGet Packager build step to achieve that. This is however out of scope for this blog post.

Uninstall Nexus

Last but not least, removing Nexus. In case you are not satisfied with Nexus you should remove it correctly. Luckily it is trivial.

As with the installation, let’s go to the nexus-2.x.x-xx\bin folder. The following are the commands to execute and their output.

D:\Nexus\nexus-2.12.0-01\bin>nexus stop

wrapper | Stopping the nexus service...

wrapper | nexus stopped.

D:\Nexus\nexus-2.12.0-01\bin>nexus uninstall

wrapper | nexus removed.

After both of these commands have succeeded, you can safely remove the folder from your disk. If you set a non-default path for the repository storage, you can remove that one too.

Conclusion

That’s all folks! I hope I covered all of the topics you may be interested in when it comes to Nexus Repository Manager OSS and NuGet. I do hope that this will encourage you to use it or to improve your current NuGet services setup.